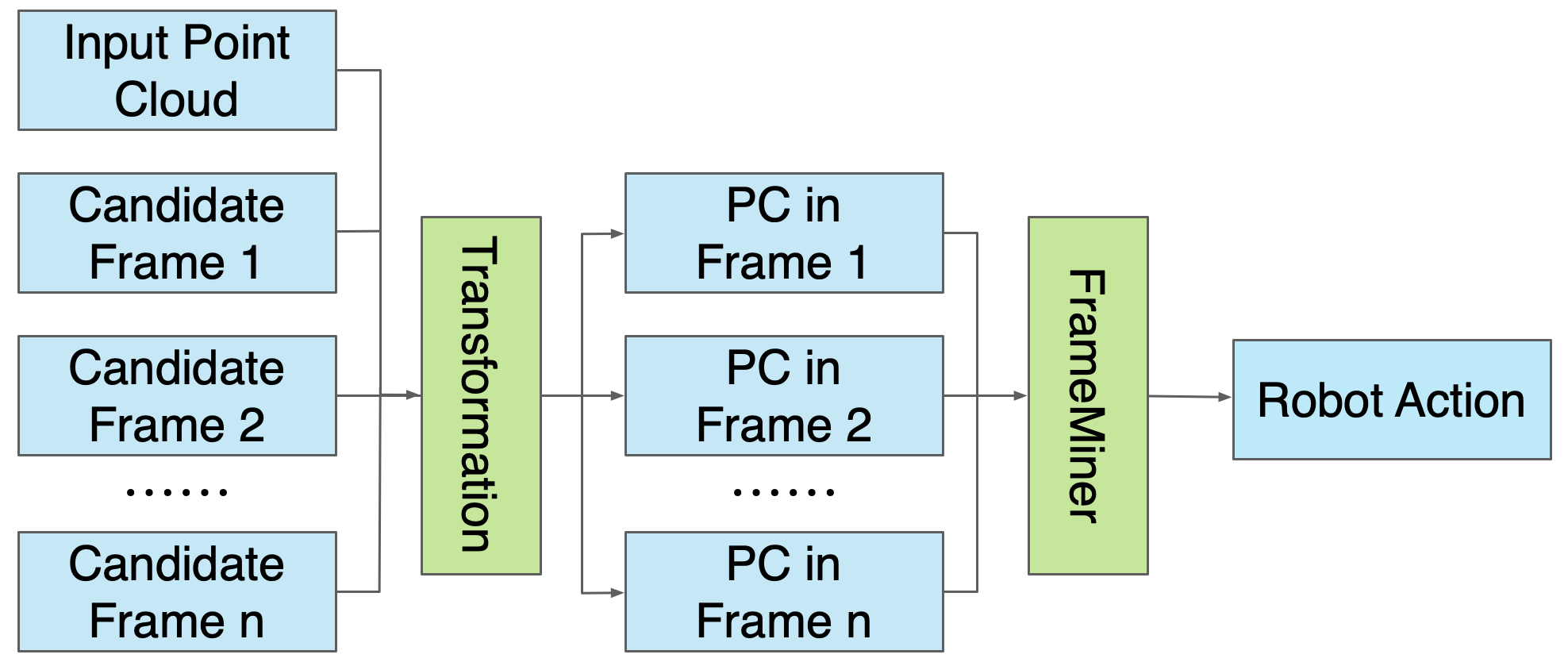

We study how choices of input point cloud coordinate frames impact learning of manipulation skills from 3D point clouds. There exist a variety of coordinate frame choices to normalize captured robot-object-interaction point clouds. We find that different frames have a profound effect on agent learning performance, and the trend is similar across 3D backbone networks. In particular, the end-effector frame and the target-part frame achieve higher training efficiency than the commonly used world frame and robot-base frame in many tasks, intuitively because they provide helpful alignments among point clouds across time steps and thus can simplify visual module learning. Moreover, the well-performing frames vary across tasks, and some tasks may benefit from multiple frame candidates. We thus propose FrameMiners to adaptively select candidate frames and fuse their merits in a task-agnostic manner. Experimentally, FrameMiners achieves on-par or significantly higher performance than the best single-frame version on five fully physical manipulation tasks adapted from ManiSkill and OCRTOC. Without changing existing camera placements or adding extra cameras, point cloud frame mining can serve as a free lunch to improve 3D manipulation learning.

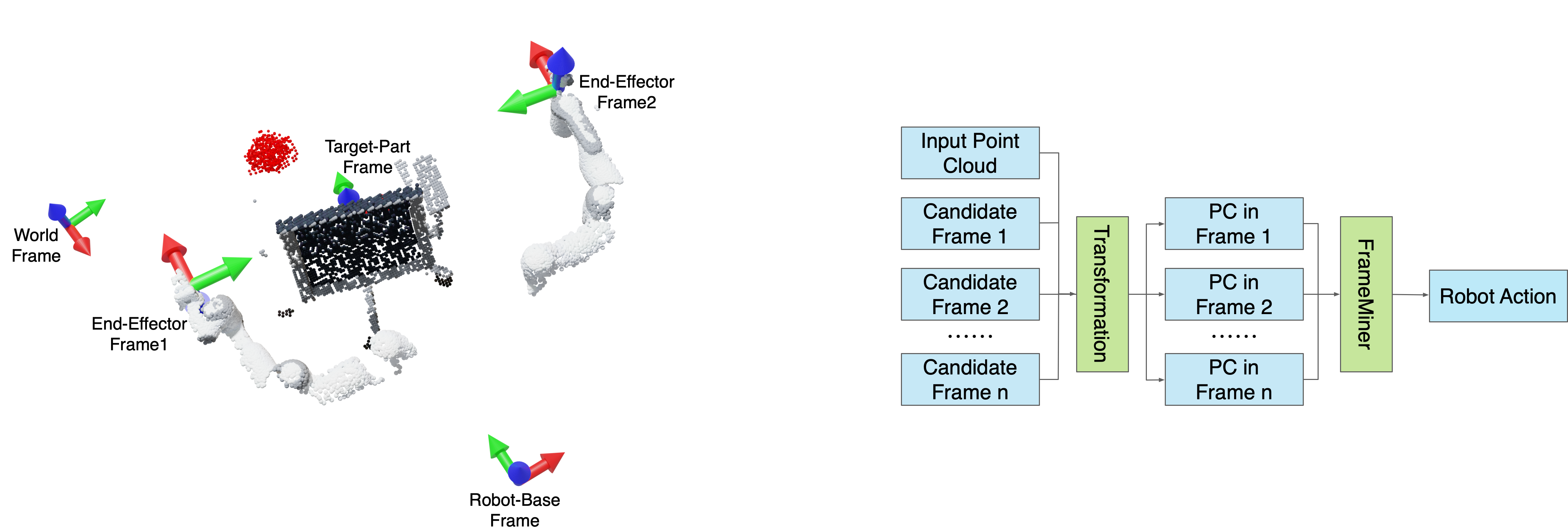

Input point clouds can be represented in various coordinate frames. Different coordinate frames may

provide different canonicalizations and alignments across time steps, which may simplify network training.

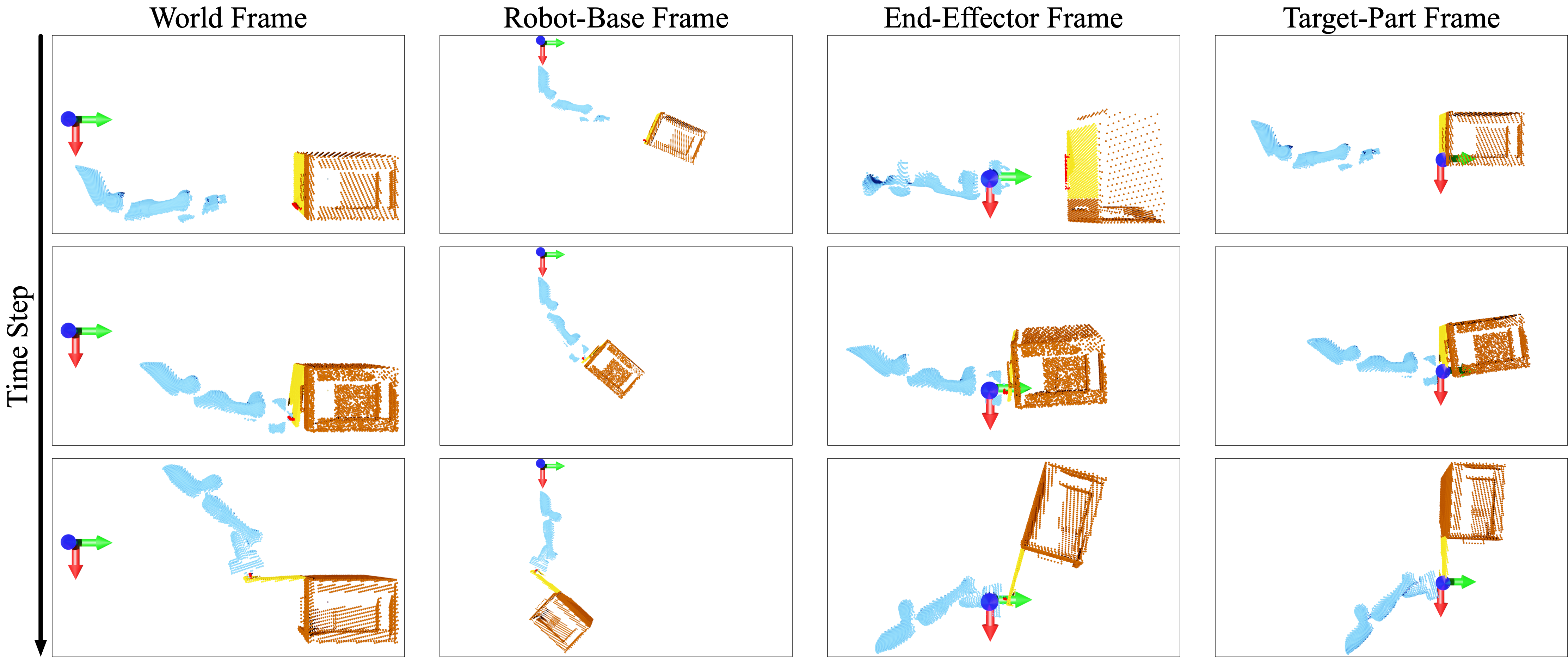

The figure visualizes three point clouds (three time steps) of an OpenCabinetDoor trajectory. Each row

shows the same point cloud represented in different coordinate frames.

Input point clouds can be represented in various coordinate frames. Different coordinate frames may

provide different canonicalizations and alignments across time steps, which may simplify network training.

The figure visualizes three point clouds (three time steps) of an OpenCabinetDoor trajectory. Each row

shows the same point cloud represented in different coordinate frames.

We find that the choice of coordinate frame has a profound impact on point cloud-based object manipulation

learning. In particular, the end-effector frame and the target-part frame lead to much better sample

efficiency than the widely-used world frame and robot-base frame on many tasks. The observation holds across

different 3D point cloud-based visual backbones, such as PointNet and SparseConv.

We find that the choice of coordinate frame has a profound impact on point cloud-based object manipulation

learning. In particular, the end-effector frame and the target-part frame lead to much better sample

efficiency than the widely-used world frame and robot-base frame on many tasks. The observation holds across

different 3D point cloud-based visual backbones, such as PointNet and SparseConv.

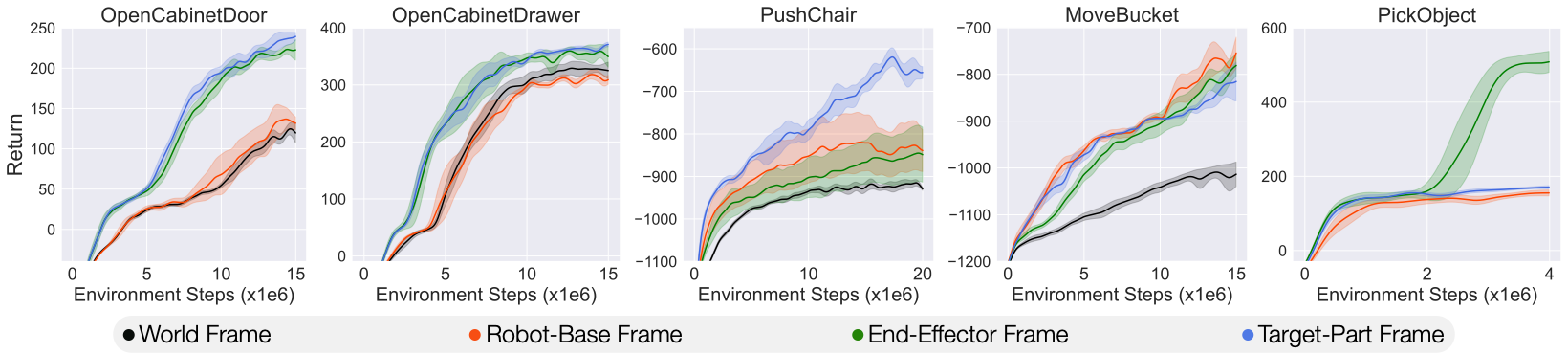

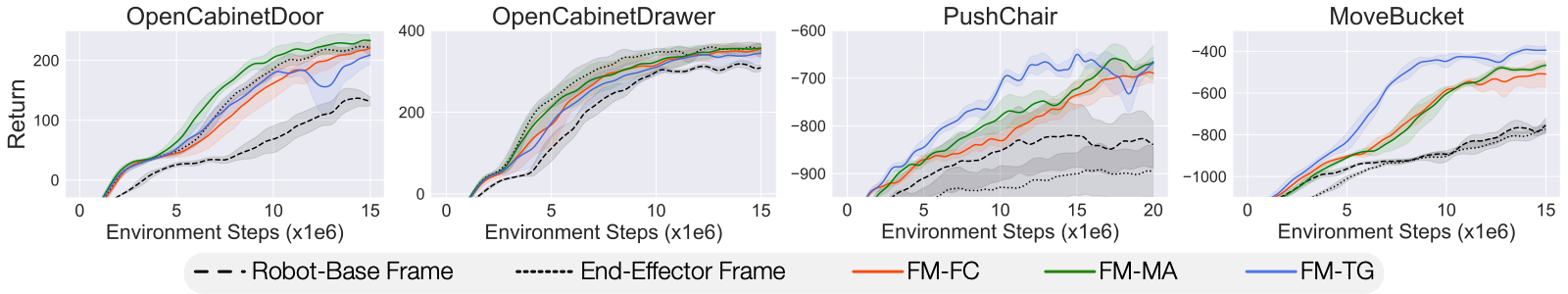

Single-frame baselines (black dashed lines) vs. FrameMiners (colored solid lines). On single-arm

tasks (i.e., OpenCabinetDoor/Drawer), our FrameMiners perform on par with the end-effector frame, which

suggests that FrameMiners can automatically select the best single frame. On dual-arm tasks, our

FrameMiners significantly outperform single-frame baselines, demonstrating the advantage of coordination

between multiple coordinate frames. While it matters to fuse information from multiple frames, the

specific FrameMiner to choose does not create much performance difference.

Single-frame baselines (black dashed lines) vs. FrameMiners (colored solid lines). On single-arm

tasks (i.e., OpenCabinetDoor/Drawer), our FrameMiners perform on par with the end-effector frame, which

suggests that FrameMiners can automatically select the best single frame. On dual-arm tasks, our

FrameMiners significantly outperform single-frame baselines, demonstrating the advantage of coordination

between multiple coordinate frames. While it matters to fuse information from multiple frames, the

specific FrameMiner to choose does not create much performance difference.

@inproceedings{liu2022frame,

title={Frame Mining: a Free Lunch for Learning Robotic Manipulation from 3D Point Clouds},

author={Liu, Minghua and Li, Xuanlin and Ling, Zhan and Li, Yangyan and Su, Hao},

booktitle={6th Annual Conference on Robot Learning},

year={2022}

}